Meaningful human control in defence applications

As computers grow smarter, they can take on more and more tasks from people. At the same time, they are becoming more capable of independent decision-making. The big question is: how can we, as people, make sure that we retain meaningful control?

AI as a repressor? Well, we have not reached that stage just yet. But in many countries around the world, police teams are experimenting with predictive algorithms in their detection work. In September 2020, Amnesty International published an alarming report on the subject. It concluded that these AI systems discriminated in ways that were not apparent to people.

As objectively as possible

It is not easy for a computer to filter out the human prejudices contained in the available data, and that is a big problem. After all, if someone is identified as a possible suspect on the basis of an AI prediction, this should happen in as objective a way as possible, and not be based on any kind of preconception.

AI systems as team partners

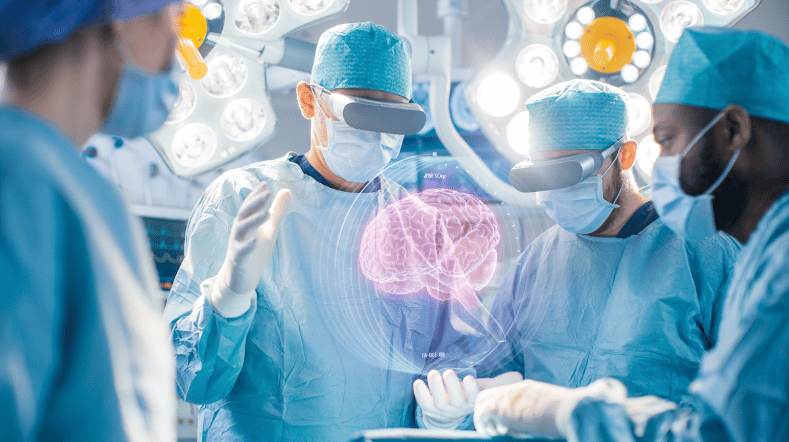

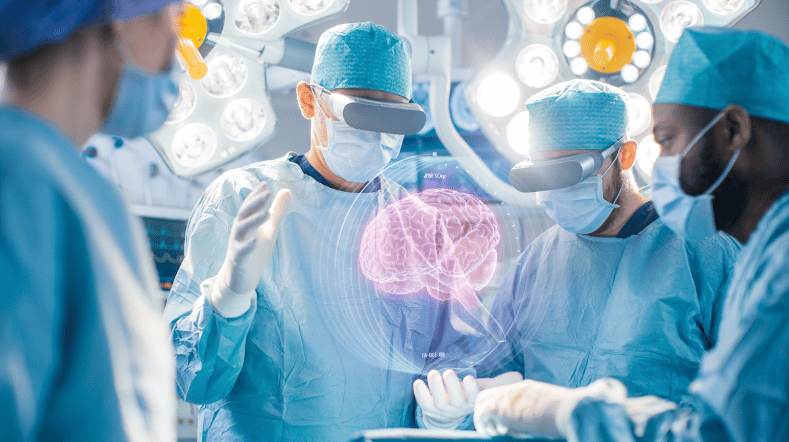

The solution? Form teams in which AI systems work as partners to people. This means that people remain involved and in control when it comes to critical decisions. Such interaction between people and AI is essential for developing systems that can act ethically on the basis of predictions.

In this way, people can use the capabilities of advanced AI to the best possible effect by delegating as many tasks as possible at an abstract level, while always retaining the option of making operational adjustments. At the same time, AI systems have to be able to explain themselves and actively involve people in the data analysis process. This prevents AI from drawing conclusions based on unreliable data or erroneous assumptions.

Greater focus on interaction between people and robots

A 'social AI' layer is therefore needed. Working in partnership with the Dutch Ministry of Defence, NATO, and various universities, TNO has already made a number of highly promising advances in this area. For example, a prototype delegation system is already being used for interactions between people and robots. We also have a test that can measure the degree of human control in a laboratory setting.

Final responsibility rests with people

The aim is to ensure that people can entrust tasks to AI systems responsibly without losing control. In other words, people will still bear final responsibility. Many fields are currently ripe for this type of 'controllable' AI system. Examples include the police, defence, and the logistics and care sectors. Each of these areas would benefit hugely from AI systems that take over various tasks reliably and without any preconceptions.
