Bas Haring on AI, science and philosophy

Michiel van der Meulen, chief geologist at the Geological Survey of the Netherlands (GDN), speaks with Bas Haring. Haring originally studied artificial intelligence, which at the time still fell under the umbrella of philosophy; that is why people started calling him a philosopher. He sees himself more as a ‘folk philosopher’ who tries to make science and philosophy accessible to a wider audience. In 2001, he published a children's book about evolution, Cheese and the Theory of Evolution. What better springboard for a geologist and a philosopher to talk about AI?

A conversation with Bas Haring

You like explaining complicated things, like science. What I'd like to discuss in this interview is evolution. Why are you interested in evolution?

'I found evolution fascinating even as a kid. Much later, when I got my PhD in artificial intelligence, I became interested in it again, because the field offered various algorithms for solving a problem, and one approach was to simulate evolution on the computer. When I actually typed out evolution as a program, I realised that it really is an incredibly simple algorithm that runs in nature and solves problems.

'The theory of evolution lays bare a fundamental flaw in human thinking: we structurally assume that somewhere there's an intention behind things. In everything we see, we think “somehow or other it's meant to be like that”. Evolution shows us that this is often not the case. There's a mechanism that operates in nature, and things happen. It may seem as if there's an intention behind it, but it just looks that way. And that's what I find so beautiful about evolution.'
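To illustrate how little code such an algorithm needs, here is a minimal sketch of simulated evolution. It is not Haring's own program: the bit-string genome, the fitness measure (count the ones), and all parameter names and values are assumptions chosen for illustration. The loop only copies, mutates and selects; nothing in it "intends" the result.

```python
import random


def fitness(genome):
    """Toy fitness (an assumption for this sketch): the number of 1-bits."""
    return sum(genome)


def mutate(genome, rate, rng):
    """Copy the genome, flipping each bit independently with probability `rate`."""
    return [1 - bit if rng.random() < rate else bit for bit in genome]


def evolve(genome_length=20, offspring_per_generation=10,
           mutation_rate=0.05, generations=200, seed=0):
    """Run a simple copy-mutate-select loop and return (best genome, fitness history)."""
    rng = random.Random(seed)  # seeded so that runs are repeatable
    parent = [rng.randint(0, 1) for _ in range(genome_length)]
    history = [fitness(parent)]
    for _ in range(generations):
        # Variation: imperfect copies of the current parent.
        offspring = [mutate(parent, mutation_rate, rng)
                     for _ in range(offspring_per_generation)]
        # Selection: the fittest of parent and offspring survives,
        # so fitness can never decrease from one generation to the next.
        parent = max(offspring + [parent], key=fitness)
        history.append(fitness(parent))
    return parent, history


if __name__ == "__main__":
    best, history = evolve()
    print("fitness at start:", history[0], "fitness at end:", history[-1])
```

Keeping the parent among the candidates is a design choice of this sketch; evolution in nature has no such guarantee against getting worse.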

You’ve deployed evolution to make things better. Are there any limits to that? How smart can artificial intelligence get?

'The funny thing is that we keep on being wrong about that. I'm not going to put any limits on it. Every limit that anyone specifies ends up being surpassed. But a device that understands things the way we understand things? I can't see that happening. When I take a sip of water, the water has a certain meaning for me. I understand water because I can drink it. It flows through my mouth. All the physical sensations define my understanding of water. A computer has far fewer of those physical sensations. A robot will always understand water differently from how we do.

'Sometimes you do want it to understand things. IBM's “Watson” computer program is now used to create recipes. There are now some recipes created by artificial intelligence that no chef would ever have thought up, but they’re really delicious. So you see that AI creates the possibilities and humans select the most interesting.'

Might we gradually also learn something about how our own creativity works?

'I think that what we’re mainly learning is that we aren’t that creative, that we don’t come up with very many ideas, but that such a device does.'

How do you explain to children what AI is?

'In my new book Kunstmatige intelligentie is niet eng [Artificial Intelligence isn't Scary], I build up artificial intelligence from the very simplest level. That’s the computer. It seems like a magical device. But if you delve into the question of what kind of thing it actually is, and you build such a thing in your mind with very simple electronic circuits, then the device becomes a bit less magical. You then begin to understand how it can do certain things. My approach is always to build up very simply and calmly, so that someone really understands what it’s about.'

People often find AI scary. Do you?

'No. I’m very optimistic where technology is concerned. I really think you need to take an open-minded view of change, and that applies to artificial intelligence too. It may seem frightening, but go ahead and delve into it. Then you'll be able to understand it. Understand it first before you form an opinion.'

'The computer scientists who create that artificial intelligence are only trained to think about ethics and responsibility to a very limited extent. The programmers have a responsibility, but so do the people who select the data. In the case of the Dutch childcare benefits scandal, that didn’t go well at all. As soon as you feed a system with data that include people's nationalities, you can bet that it'll discover relationships between nationalities and what you're looking for. So you shouldn't input those data. I'm developing a course on ethics and artificial intelligence for next semester. If you're working with artificial intelligence, you need to be aware of the consequences it can have. That’s your responsibility as a data scientist or an information scientist.'

How can AI help science to advance?

'Artificial intelligence is very good at finding patterns in data, and that can help us. Take gravitational theory, for example. AI might have discovered that relationship faster than humans, but the system isn't going to discover the story behind it. Those systems can indeed help us discover certain connections sooner, but they can’t discover theories. They also can't formulate causal relationships, so they won't help us very much with that.'
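The difference between finding a relationship and explaining it can be shown with a small sketch. The example below is an illustration only, not something from the interview: the measurements are synthetic, generated from Newton's inverse-square law with assumed masses and distances, and the function names are hypothetical. A plain least-squares fit on the logarithms recovers the exponent of the relationship, but the result is just a number; it says nothing about why the force falls off that way.

```python
import math

G = 6.674e-11  # gravitational constant in m^3 kg^-1 s^-2


def synthetic_measurements(m1=5.0, m2=10.0):
    """Generate noise-free (distance, force) pairs from F = G*m1*m2/r^2.

    The masses and distances are made-up values for this sketch.
    """
    distances = [0.5, 1.0, 2.0, 4.0, 8.0]
    return [(r, G * m1 * m2 / r**2) for r in distances]


def fit_power_law(points):
    """Fit F = c * r**k by least squares on log(F) = log(c) + k*log(r).

    Returns the pair (c, k).
    """
    xs = [math.log(r) for r, _ in points]
    ys = [math.log(f) for _, f in points]
    n = len(points)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Ordinary least-squares slope and intercept for a straight line.
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), slope


if __name__ == "__main__":
    c, k = fit_power_law(synthetic_measurements())
    print("fitted exponent:", k)
```

On this noise-free data the fitted exponent comes out at -2 up to floating-point rounding. The fit captures the pattern, while the theory behind it still has to come from people.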

We develop and use AI at TNO. Do you have any tips for us?

'There’s so much more data and things are so much faster than they used to be, so you can now apply a lot of AI. I would just use it and reuse it, because that's what’s great about AI. You can simply use modules that other people have written. It's a very strange way to create software, but it’s a very modern way to do so. Reuse what other people have done for you and get down to work with it. But do be aware that those modules very often carry bias.'

Download vision paper ‘Towards Digital Life: A vision of AI in 2032’

More about 'Towards Digital Life: A vision of AI in 2032'

- David Deutsch on the development and application of AI

- Georgette Fijneman on the promise of AI for health insurers

- Rob de Wijk on the rise of AI in geopolitical context

- Bram Schot on the impact of AI on mobility

- Eppo Bruins on AI in different government domains

- Arnon Grunberg on AI, creativity and morality

Get inspired

Working on reliable AI

AI model for personalised healthy lifestyle advice

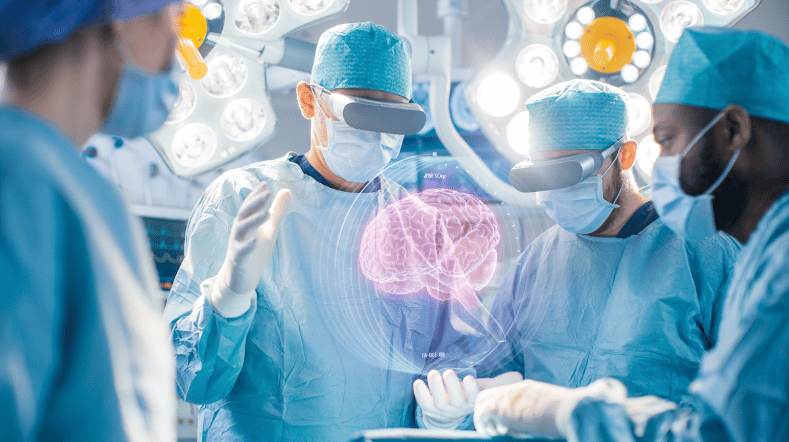

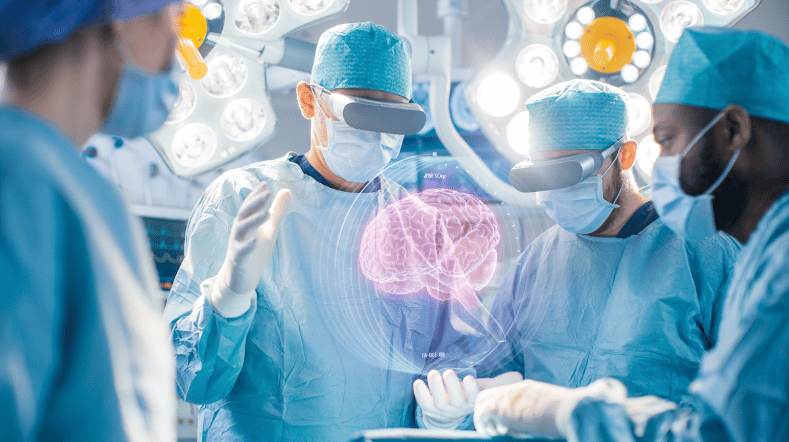

AI in training: FATE develops digital doctor's assistant

Boost for TNO facilities for sustainable mobility, bio-based construction and AI

GPT-NL boosts Dutch AI autonomy, knowledge, and technology