Safe co-drivership with AVs requires advanced Human-Vehicle Interaction and Driver Monitoring Systems

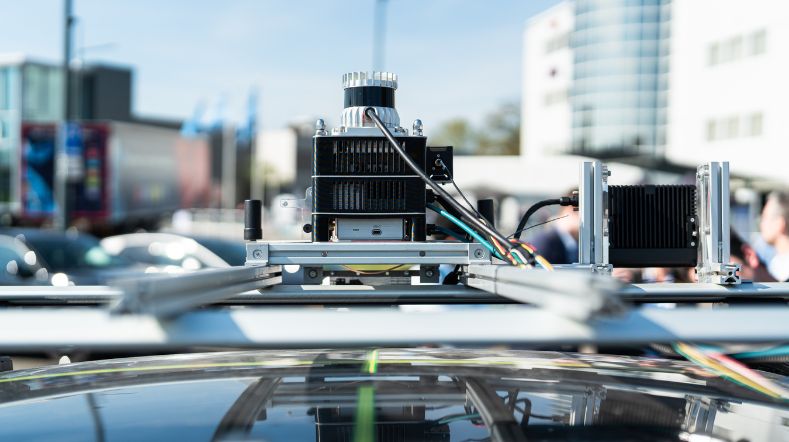

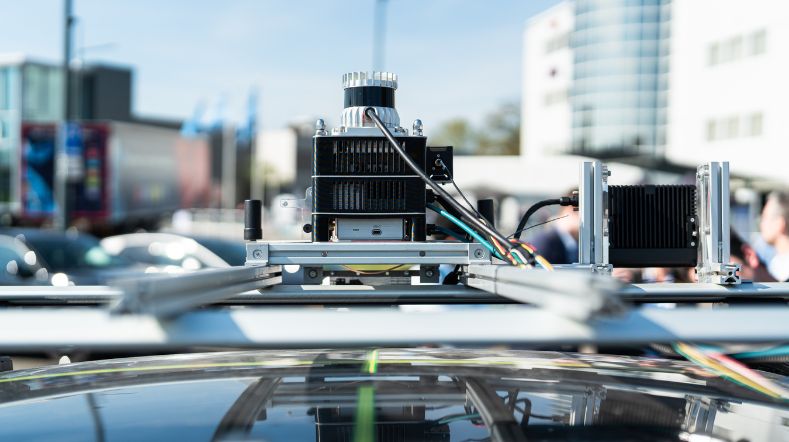

With the rise of Advanced Driver Assistance Systems (ADAS) and Automated Driving (AD) systems on our roads, Driver Monitoring and Human-Vehicle Interaction have become crucial to keeping drivers involved and safe. New European laws and safety standards raise the bar for the industry but also challenge national authorities to translate abstract guidelines into concrete specifications for type approval. TNO’s Human Factors expert Jan Souman explains the current state of the art in achieving safe co-drivership, and why the driver’s role is becoming more, rather than less, important in automated vehicles.

‘The automotive industry faces three trends that could impact driving safety,’ warns Jan Souman, Senior Scientist in Human Factors of Automated Driving. ‘First, as driving becomes more automated, drivers are shifting from active operators to supervisors, which erodes their alertness at the moment they must suddenly take control. Second, modern vehicles combine various automation levels, which can lead to mode confusion: drivers may be unsure which system is active and what the car will do. Lastly, smartphone addiction is a dangerous source of distraction, further compromising drivers’ ability to take control of the vehicle.’


Two-way strategy for safe co-drivership

To address these challenges, industry and science focus on two complementary approaches, both of which still involve many unknowns: Driver Monitoring Systems (DMS) and Human-Vehicle Interaction design. Souman explains, ‘By closely monitoring the driver, the vehicle can assess whether they are fit to perform driving tasks. For example, Level 3 Automated Lane Keeping Systems (ALKS) allow you to take your eyes off the road, but you can’t fall asleep or use your smartphone. Cameras monitor this and alert the driver when necessary.’
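To make the ALKS example concrete, the rule Souman describes can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not TNO’s method or any regulation’s wording: the state names and the `needs_alert` function are invented here, and it assumes the camera has already classified the driver into one of a few discrete states.

```python
from enum import Enum, auto


class DriverState(Enum):
    """Hypothetical driver states a camera-based DMS might output."""
    ATTENTIVE = auto()         # eyes on the road
    EYES_OFF_ROAD = auto()     # looking away, but awake and not on the phone
    ASLEEP = auto()
    USING_SMARTPHONE = auto()


# Per the article: with Level 3 ALKS active you may look away from the road,
# but you may not fall asleep or use your smartphone.
PROHIBITED_DURING_ALKS = {DriverState.ASLEEP, DriverState.USING_SMARTPHONE}


def needs_alert(state: DriverState, alks_active: bool) -> bool:
    """Return True if this hypothetical DMS should alert the driver."""
    if alks_active:
        # Eyes off the road is tolerated; sleep and phone use are not.
        return state in PROHIBITED_DURING_ALKS
    # Assumption: without automation, any state other than attentive warrants an alert.
    return state is not DriverState.ATTENTIVE


if __name__ == "__main__":
    print(needs_alert(DriverState.EYES_OFF_ROAD, alks_active=True))     # False
    print(needs_alert(DriverState.USING_SMARTPHONE, alks_active=True))  # True
```

The hard part, as the rest of this article makes clear, is not this decision rule but reliably estimating the driver’s state in the first place.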

The second approach is to improve Human-Vehicle Interaction design by optimising and standardising controls and the information presented to the driver. ‘For instance, clear visual icons can indicate the vehicle’s current driving mode, reducing the risk of mode confusion.’

Advanced Occupant Monitoring

Driver and Occupant Monitoring technology is evolving rapidly. Previously, vehicles had only basic systems, such as seat sensors to encourage seatbelt use. Now, seatbelts can also carry sensors that measure heart rate and breathing, and research into advanced in-cabin monitoring is exploring radar and ultra-wideband (UWB) applications. However, most systems currently rely on cameras, particularly because new legislation requires vehicles to detect distraction with Advanced Driver Distraction Warning (ADDW) systems by 2026, often using near-infrared cameras. Stereo and Time-of-Flight (ToF) cameras are also being explored to create 3D images of occupants, enhancing onboard safety systems.

DMS becomes mandatory

ADDW isn’t the only new legislation affecting OEMs and Tier 1 suppliers. This year, Driver Drowsiness and Attention Warning (DDAW) systems became mandatory in the EU for all new vehicles. Legislation for Driver Control Assistance Systems (DCAS), which requires Driver Monitoring Systems, is also in development. Euro NCAP is expanding its safety guidelines for in-cabin monitoring as part of its ADAS rating: from 2026, ratings will also be based on Child Presence Detection, Posture, and Driver Under Influence detection. Future plans include Occupant Status Monitoring and Cognitive Distraction detection.

This wave of regulations challenges both the industry and national authorities. Jan Souman notes, ‘DMS legislation often lacks specific requirements, leaving room for interpretation. At TNO, we assist national road and vehicle authorities in interpreting this legislation and translating it into specific requirements for type approval requests.’


Safe under all circumstances?

The greatest challenge with DMSs is ensuring safety in all circumstances. Souman says, ‘Developing and testing these systems in controlled labs doesn’t guarantee safe operation in real-world conditions. Most systems are designed for average-sized drivers and common situations. But what if your posture isn’t average, or you’re driving in difficult light conditions?’

And there’s another problem. Systems such as Drowsiness Detection were originally meant to help drivers stay alert while they remained in control. Now they are essential safety features: what if a system incorrectly concludes that the driver is awake and hands back control, most likely in a critical situation?

‘The challenge lies in assessing the vast number of variables. A collective effort is needed to strategically identify and study the key factors, ideally through a joint research project.’

Multiple parameters for accuracy

TNO specialises in advanced assessment methods for DMS, combining expertise in cognitive psychology and vehicle automation. ‘To enhance DMS effectiveness and accurately determine driver state, we need more parameters and advanced analysis methods. Beyond simply asking drivers how they feel, we can use physical parameters such as heart rate, breathing, pupil size, body movements, and temperature. However, we must accept that some uncertainty will always exist. Collaboratively, we need to define an acceptable level of uncertainty.’

TNO is involved in the EU project Aware2O, which focuses on improving the safety of highly automated driving systems, including DMSs. ‘Our focus is on situational awareness, meaning how well the driver understands the situation inside and around the vehicle at any moment, and what it implies for them. This is key to driver monitoring in automated driving: is the driver aware of all relevant information? This is complex because we can’t look inside someone’s head, so we try to infer it from behaviour, such as eye movements.’

Interdisciplinary knowledge is key

TNO's interdisciplinary expertise makes it particularly well-suited for research in Human-Vehicle Interaction and Driver Monitoring, Souman explains. ‘Human interaction with vehicles requires expertise in physics, psychology, system engineering, AI, and vehicle behaviour. At TNO we offer a unique combination of knowledge, facilities and experience.’

TNO’s Soesterberg facilities specialise in human factors research and measurements such as EEG and eye-tracking. MARQ, the newly developed open innovation centre in Helmond, offers advanced research and testing facilities for Connected, Cooperative and Automated Mobility. It includes one of the most advanced driving simulators, featuring the latest eye-tracking technology, a modular setup for different DMS types, and 360-degree projection for various HMI simulations.

What’s next?

So, how should we proceed? ‘It’s crucial to keep the driver involved in the driving process, even at the highest levels of automation,’ says Jan Souman. ‘Smartphones are already a major road safety issue. If we remove driver responsibility completely, the consequences are clear. We need to find natural ways to include drivers in the process. Additional research is essential to ensure safe DMS operation in all situations, and collaborative research, with industry working together, is key. At the same time, we should try to bridge the gap between industry and authorities so that AVs offering safe co-drivership can be introduced effectively on our roads. TNO can add great value in both areas.’
