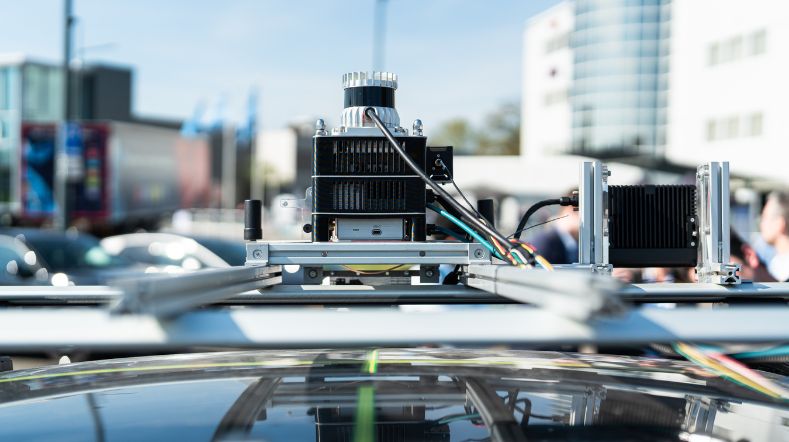

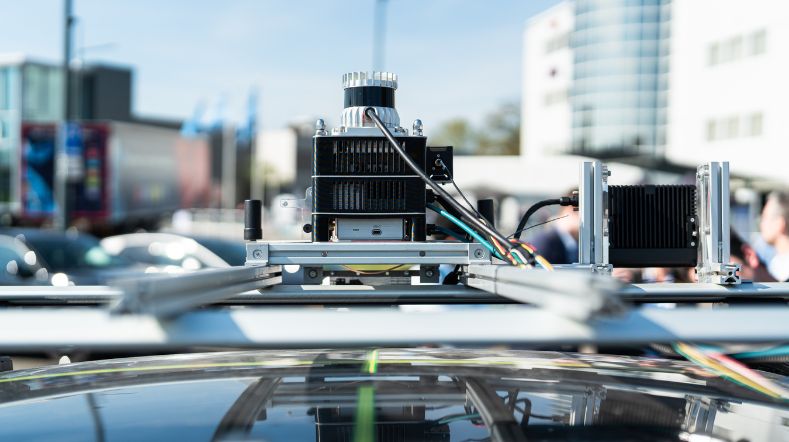

Is it safe for self-driving cars to hit the road yet?

How do we ensure that self-driving cars are safe? That is the big question researchers have been pondering since 1980. Although aids such as cruise control and automatic braking have made driving easier and safer, the fully self-driving car still seems far away. Where do we stand in this development? What steps do we still need to take to allow self-driving cars to safely hit the road? That is what TNO is investigating.

Automatic system as driver

Volvo once predicted that cars would be self-driving by 2010, but more than a decade after that, we still need a human driver behind the wheel. Indeed, until 2021, global traffic rules were fully based on the concept of a human driver. This made the earlier advent of a self-driving car impossible. ‘It was not until 2021 that these rules were amended so that a driver could also be an automatic system. But the fact that this regulation is in place does not yet mean that self-driving cars can safely take to the road’, says TNO's Erwin de Gelder.

What is safety?

‘If we want to determine whether a self-driving car is safe, we first need to look at what safety actually is’, says Olaf Op den Camp of TNO. For this purpose, we use two complementary concepts from 2015 by Professor Erik Hollnagel: Safety-I and Safety-II.

Safety-I and Safety-II

Safety-I involves making sure that as few things go wrong as possible, for example by preventing collisions. Safety-II, by contrast, aims to make sure that as many things as possible go right. This Safety-II concept should also be included in the development of self-driving cars. That is why we are always looking at what self-driving cars should be able to do under different conditions.

Safe driving

With these concepts, we have established what safe driving means, namely: avoiding potential collisions (Safety-I) and 'normal', attentive, social driving, showing predictable behaviour and being able to properly anticipate situations (Safety-II).

Problem with AI

We want self-driving cars to meet the same requirements as human drivers. But therein lies the big challenge. People and systems work fundamentally differently. ‘An AI system learns to recognise pedestrians through pixels. But on a symbolic level, AI knows nothing about what a pedestrian actually is, in the way that humans do. So how can we be sure that the system will recognise a pedestrian in all circumstances?’ asks Jan-Pieter Paardekooper of TNO.

The same problem applies to traffic rules. Paardekooper: ‘People do not always behave consistently in traffic. An AI system can learn various examples of human behaviour in traffic, but it cannot derive traffic rules from that. So, if the AI system encounters a situation it has never seen before, the system cannot reason about what to do in that new situation.’

People are always learning

Another big difference between people and a system is that people are always learning. ‘We can adapt well to new situations. For example, we will drive slower on a road surface full of potholes’, says Sjoerd Houwing of the Dutch Central Office for Motor Vehicle Driver Certification (CBR). ‘Self-driving cars are pre-programmed and cannot react or adapt to unforeseen circumstances.’

Preventing human error

So does an automatic system have only disadvantages? The answer to that question is ultimately no! Self-driving cars will not drive while drunk, intoxicated, tired or distracted. This is an advantage that will allow us to avoid many human errors.

‘We should aim for a self-driving car that drives even better than humans drive on a "good day"’, says Mikael Ljung Aust, PhD at Volvo Cars Safety Centre.

Higher standard for self-driving cars

Formulating this ambition is not the end of the story, however. We also need to test the self-driving car and check whether it fulfils our expectations of safe driving.

‘Safe self-driving cars should be subject to a higher standard than human drivers’, says Houwing, researcher at CBR.

‘Human drivers must demonstrate that they can deal with everyday situations. In contrast, when it comes to self-driving cars, we need to test whether they can consistently deal with different types of situations, without the need for continuous learning, because they cannot learn while driving as humans can.’

Competent driving

But how do we test whether a self-driving car can drive properly everywhere? ‘A car programmed to drive in an extremely crowded city, such as Lima in Peru, will not do well in Utrecht in the Netherlands, and vice versa’, according to Jeroen Hogema of TNO. TNO is therefore working with CBR and the Netherlands Vehicle Authority (RDW) on a way to make safe competent driving behaviour measurable.

Different testing methods

How do we know what requirements competent driving must meet? We got those rules from driving guide books from different countries. We then used drones to watch traffic on the highway and compare the driving behaviour we saw there with the rules in these books. We also had study participants drive a car, with a CBR examiner next to them. The examiner assessed the participants on competent driving.

‘These methods of measuring competent driving behaviour are part of a process we are still working on and continuously improving’, says Hogema. Once we know what competent driving behaviour is in humans, we can more easily apply it to a system.
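To make the drone comparison described above more concrete, here is a minimal sketch of how observed driving behaviour could be checked against a single written rule. It is only an illustration under stated assumptions, not TNO's actual method: the `Observation` format, the function names and the two-second following-distance threshold are all hypothetical choices made for this example.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """One drone-derived measurement of a car following another (hypothetical format)."""
    vehicle_id: str
    speed_mps: float  # speed of the following vehicle, in metres per second
    gap_m: float      # distance to the vehicle ahead, in metres


# Assumed guidebook rule, for illustration only: keep at least two seconds of headway.
MIN_HEADWAY_S = 2.0


def time_headway(obs: Observation) -> float:
    """Seconds the follower needs to reach the leader's current position."""
    if obs.speed_mps <= 0:
        return float("inf")  # a stationary vehicle cannot close the gap
    return obs.gap_m / obs.speed_mps


def compliance_rate(observations: list[Observation],
                    min_headway_s: float = MIN_HEADWAY_S) -> float:
    """Share of observations that satisfy the headway rule (0.0 to 1.0)."""
    if not observations:
        raise ValueError("no observations to assess")
    compliant = sum(1 for o in observations if time_headway(o) >= min_headway_s)
    return compliant / len(observations)


if __name__ == "__main__":
    # Made-up sample values, not real measurements.
    sample = [
        Observation("car-a", speed_mps=30.0, gap_m=75.0),
        Observation("car-b", speed_mps=30.0, gap_m=30.0),
        Observation("car-c", speed_mps=25.0, gap_m=50.0),
    ]
    print(f"compliance rate: {compliance_rate(sample):.2f}")
```

A real assessment would cover many rules at once and would have to deal with measurement noise. The point of the sketch is only that a written rule becomes measurable once it is expressed as a check on observed data.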

Combination of learning and reasoning

We have come a long way towards making cars self-driving, but there are still quite a few bumps in the road. Without the power of reasoning, self-driving cars remain unsafe. An important step is to combine an AI system that can learn well with one that can reason about why it does something. That may be where one of the key solutions lies for getting self-driving cars safely on the road.

Want to know more about TNO's research on self-driving car safety? Download the StreetWise position paper 2024.
